The Open AI Platform for _

One fullstack platform for Compute, Inference, Fine-tuning, and RAG on Open Source Models.

Unlock $1 in free API credit on your first recharge - enough to generate up to ~4M tokens
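
As a rough check of what that credit implies, the sketch below works out the per-token price at which $1 would cover about 4M tokens. It assumes a single flat rate across input and output tokens, which the page does not state; actual per-model pricing may differ, and the function name is purely illustrative.

```python
# Back-of-the-envelope arithmetic for the promotional credit.
# Assumption: one flat price per token; real pricing likely varies by model.

CREDIT_USD = 1.00      # free credit quoted on the page
TOKENS = 4_000_000     # "~4M tokens" quoted on the page


def implied_price_per_million(credit_usd: float, tokens: int) -> float:
    """Return the USD price per 1M tokens implied by a credit and a token budget."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return credit_usd / tokens * 1_000_000


price = implied_price_per_million(CREDIT_USD, TOKENS)
print(f"Implied price: ${price:.2f} per 1M tokens")
```

Under that flat-rate assumption the credit corresponds to roughly a quarter of a dollar per million tokens, which is the kind of figure to compare against the listed price of whichever model you plan to call.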

What customers build with Qubrid

Get instant access to the most popular OSS models - optimized for cost, speed, and quality on the fastest AI cloud

Enterprise OCR & RAG

Convert complex documents into structured, searchable knowledge with high-accuracy OCR and scalable RAG pipelines. Built for large volumes, domain-specific data, and production AI workloads.

AI Automation & Workflows

Design, run, and scale automated AI workflows across models, tools, and data sources - with reliable orchestration and production infrastructure.

Custom Built Agents

Design, deploy, and scale intelligent AI agents that plan, reason, call tools, and execute multi-step tasks - powered by Qubrid’s high-performance AI infrastructure.

Clinical & Research Analysis

Accelerate clinical and research workflows with AI-powered document analysis, data extraction, and knowledge retrieval - built for accuracy, scale, and domain-heavy datasets.

Marketing Automation

Automate prospect research, personalization, and outreach workflows using AI models and scalable inference - built for high-volume, multi-channel marketing operations.

How customers do it

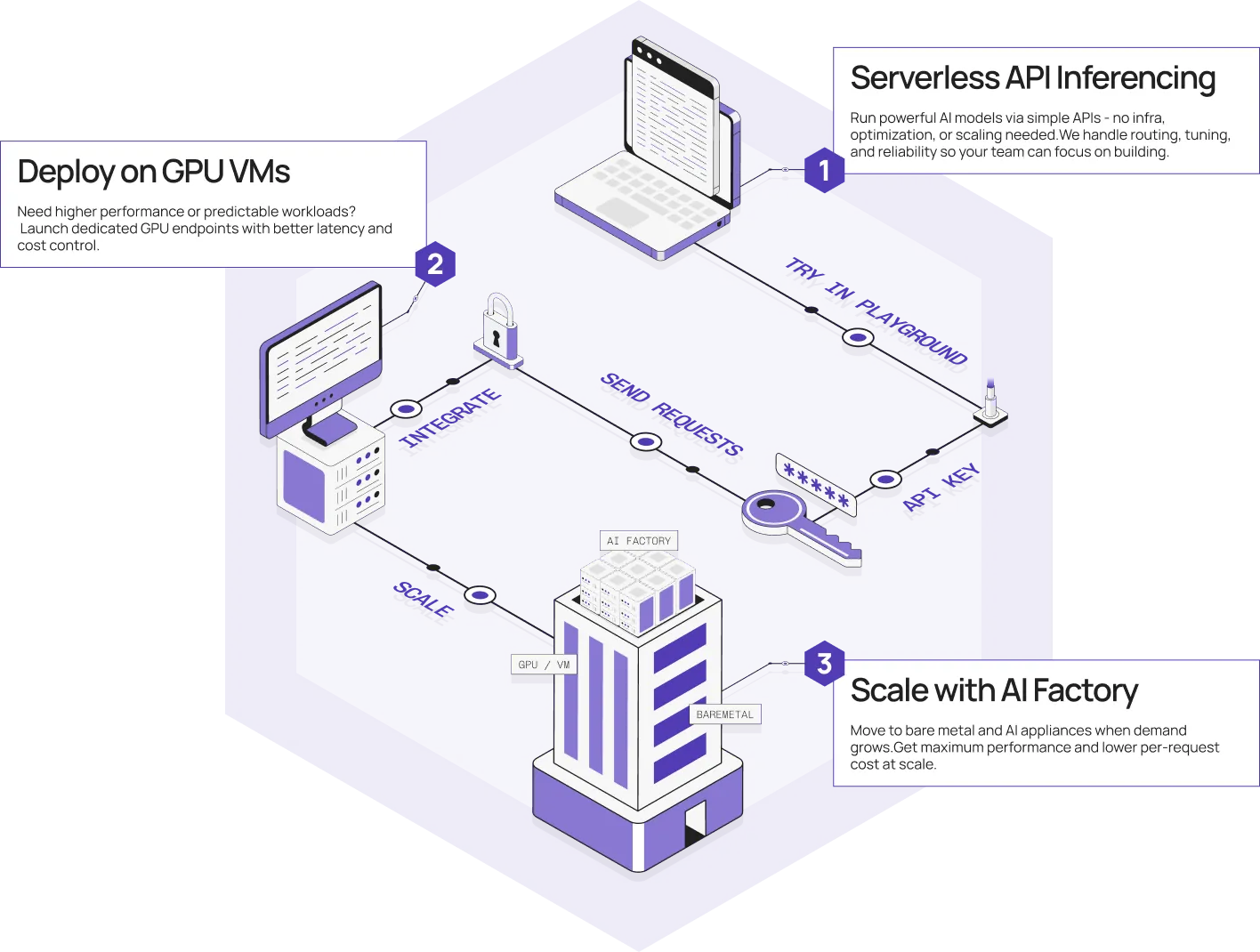

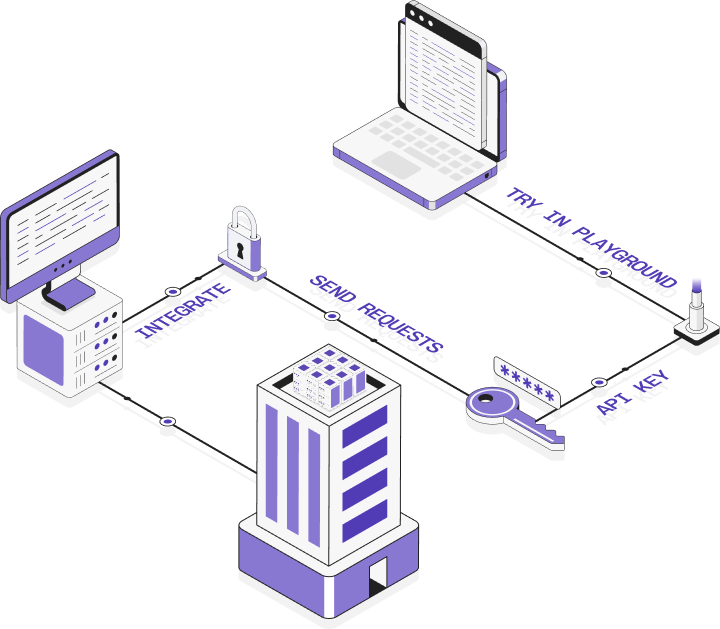

01. Serverless API Inferencing

Run powerful AI models via simple APIs - no infra, optimization, or scaling needed. We handle routing, tuning, and reliability so your team can focus on building.

02. Deploy on GPU VMs

Need higher performance or predictable workloads? Launch dedicated GPU endpoints with better latency and cost control.

03. Scale with AI Factory

Move to bare metal and AI appliances when demand grows. Get maximum performance and lower per-request cost at scale.
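
One way to reason about when to move from serverless APIs to a dedicated GPU endpoint is a simple break-even estimate. The sketch below is illustrative only: the per-token price, the hourly GPU rate, and the function name are placeholder assumptions, not Qubrid pricing or a Qubrid API.

```python
# Break-even sketch: at what sustained token volume does a dedicated GPU
# cost less than paying per token? All prices are hypothetical placeholders.


def break_even_tokens_per_hour(gpu_usd_per_hour: float,
                               serverless_usd_per_million: float) -> float:
    """Tokens per hour above which a dedicated GPU is cheaper than serverless.

    Ignores whether one GPU can actually sustain that throughput.
    """
    if serverless_usd_per_million <= 0:
        raise ValueError("serverless price must be positive")
    return gpu_usd_per_hour / serverless_usd_per_million * 1_000_000


# Placeholder inputs chosen only to make the arithmetic easy to follow.
threshold = break_even_tokens_per_hour(gpu_usd_per_hour=2.0,
                                       serverless_usd_per_million=0.5)
print(f"Break-even: {threshold:,.0f} tokens/hour")
```

With real prices substituted in, a workload that stays well below the threshold is usually better served by the serverless tier, while one that stays consistently above it is a candidate for a dedicated endpoint, provided the hardware can handle that load.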

Blazing-fast inferencing. Serverless APIs

Get instant access to the most popular OSS models - optimized for cost, speed, and quality on the fastest AI cloud

Our partnership with NVIDIA enables us to bring you the best infrastructure solutions to accelerate AI

Deploy your AI workflows on Qubrid's GPU VMs

High-performance NVIDIA GPUs with flexible scaling

AI/ML Templates

Choose from ready-to-use AI/ML environments preloaded with popular frameworks like PyTorch and TensorFlow. Launch faster without setup overhead and start building immediately.

On-Demand Availability

Spin up latest-generation GPUs instantly when you need them. No long commitments - scale compute up or down based on workload demand.

SSH Access

Get secure SSH and full root access to your GPU instances. Install custom libraries, run scripts, and manage workloads with complete control.

Configurable Storage

Attach high-speed storage volumes tailored to your workload size. Easily expand capacity for datasets, checkpoints, and model artifacts.

Auto Stop

Automatically shut down idle instances after a set time to reduce costs. Perfect for experiments, testing, and burst workloads.
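
The idle-timeout idea behind Auto Stop can be sketched in a few lines. This is a minimal illustration of the decision logic, not Qubrid's implementation; the function name, the 30-minute limit, and the timestamps are all hypothetical.

```python
from datetime import datetime, timedelta


def should_auto_stop(last_activity: datetime, now: datetime,
                     idle_limit: timedelta) -> bool:
    """Return True once the instance has been idle for at least idle_limit."""
    return now - last_activity >= idle_limit


# Hypothetical example: last activity at 12:00, 30-minute idle limit.
last_activity = datetime(2025, 1, 1, 12, 0)
idle_limit = timedelta(minutes=30)

print(should_auto_stop(last_activity, datetime(2025, 1, 1, 12, 10), idle_limit))  # False
print(should_auto_stop(last_activity, datetime(2025, 1, 1, 12, 45), idle_limit))  # True
```

A real scheduler would also need a definition of "activity" (GPU utilization, SSH sessions, running jobs), which the page does not specify.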

Check out our latest GPUs

Scale with AI Factory

Infrastructure | Hosting | Deployments